Head Start teachers in Minnesota were convinced, by their shared experience, of the value of their approach to measuring results of their work with young children: authentic assessment of children's developmental progress.

But what did they really know about the quality of information collected through authentic assessment? And could they determine, as Head Start Program Performance Standards require, that authentic assessment effectively informs instruction and monitors children's progress? The Minnesota Head Start Association (MHSA) set out to answer these questions, and in the process, it has created a statewide learning community that has not only studied the value of authentic assessment but has also built a state Head Start database that combines assessment, demographic, and classroom information.

MHSA Executive Director Gayle Kelly states the community's ambitious goal: "We want to set the model for what an early childhood data system should look like."

Assessing assessment

The MHSA had previously created learning communities around oral health and early childhood mental health. To explore the assessment process and its value in strengthening classroom practice, the association sought support from the Minnesota Early Learning Foundation. With grant resources, it convened a quality assessment group with lead teachers and education coordinators representing 25 grantees, about three-fourths of all those in the state. In addition to the value of regular networking and access to expert consultation, the participating agencies benefited from a state license arrangement that gave them access to two online assessment tools—Creative Curriculum and Work Sampling System®—at significantly reduced cost.

The project sought to establish a statewide online assessment system that would allow the MHSA to aggregate data from more than 5,000 of the 17,000 children enrolled in Minnesota's Head Start programs. But it soon turned its attention to the problem of data quality and the demands authentic assessment places on teachers' observational and computer skills. Although close to 500 Head Start staff were trained in the two instruments, neither tool offered an affordable or efficient way to measure the reliability of teachers' ratings.

The group addressed the problem by working with the University of Minnesota Center for Early Education and Development to create and pilot a child outcomes assessment quality review instrument. This "fidelity checklist" was tested with data from 88 teachers, and it revealed that the quality and completeness of teachers' data were lower than expected (receiving an average score of 9 out of 15 possible points).

"Assessing the quality of teachers' assessment is not a straightforward process but is especially critical," the MHSA concluded. "We should be extremely cautious about making high stakes decisions until more is understood about the quality of the data being used to hold programs accountable." Adds Kelly, "The data analysis helped us understand the importance of having good data systems and quality checks."

Kelly says one bonus of the data quality work has been the "growth in education coordinators' abilities regarding data collection and analysis. These are people who are way more relationship-oriented. The most important thing we've done is meet that skill deficit. Now they understand when the data looks wrong—when they see patterns that just don't make sense."

Assessing child progress

Now more experienced in matters of data assessment and quality, and continuing the fidelity checks, the MHSA in the spring of 2011 began creating the Minnesota Head Start database to inform local programs' efforts to set and meet school readiness goals. A partnership of the MHSA and the University of Minnesota Human Capital Research Center (HCRC), the School Readiness Goals Project convened 23 programs to study child progress in relation to the "influencing factors" of child demographic characteristics, family characteristics, teacher characteristics, and classroom and program characteristics. Beginning with the 2010-11 school year, 18 of the 23 programs turned over data collected through online assessment tools including Teaching Strategies GOLD, Work Sampling Online, and Online COR.

As with the assessment group, the work ran into data issues, such as the difficulty program staff had in pulling demographic and other data out of software packages and the fact that many key variables, ranging from mental health diagnoses to teacher qualifications, were not tracked electronically. But the project did launch a database that combined child, family, teacher, and classroom characteristics with assessment data across three tools for 2,500 children. It examined the alignment of the assessment tools and determined which variables were predictive from fall to spring of the school/program year. And with 2011-12 data, it established benchmarks for child outcomes and began tracking child progress by assessment tool.
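As a rough illustration of what combining these sources involves, the sketch below joins assessment records to child and classroom characteristics in plain Python. It is a minimal sketch under stated assumptions: every record carries a shared `child_id`, each child maps to one `classroom_id`, and the field names, the `merge_records` helper, and the sample values are all hypothetical, not the project's actual schema or data.

```python
# Hypothetical sample records; field names are illustrative, not MHSA's schema.
children = [
    {"child_id": 1, "classroom_id": "A", "family_income_band": "low"},
    {"child_id": 2, "classroom_id": "B", "family_income_band": "middle"},
]
classrooms = {
    "A": {"teacher_degree": "BA", "class_size": 17},
    "B": {"teacher_degree": "AA", "class_size": 20},
}
assessments = [
    {"child_id": 1, "tool": "Teaching Strategies GOLD", "fall": 40, "spring": 62},
    {"child_id": 2, "tool": "Work Sampling Online", "fall": 55, "spring": 70},
    {"child_id": 3, "tool": "Online COR", "fall": 30, "spring": 45},
]


def merge_records(children, classrooms, assessments):
    """Join each assessment record to its child's and classroom's characteristics."""
    by_child = {c["child_id"]: c for c in children}
    merged = []
    for a in assessments:
        child = by_child.get(a["child_id"])
        if child is None:
            # No matching child record: skip rather than guess.
            continue
        room = classrooms.get(child["classroom_id"], {})
        # Later dicts win on key collisions; child_id appears in both
        # child and assessment records with the same value.
        row = {**child, **room, **a}
        # Fall-to-spring gain; only comparable within the same tool.
        row["gain"] = a["spring"] - a["fall"]
        merged.append(row)
    return merged


rows = merge_records(children, classrooms, assessments)
for r in rows:
    print(r["child_id"], r["tool"], r["teacher_degree"], r["gain"])
```

Dropping unmatched records (child 3 here) rather than filling them in is one simple design choice; a real system would more likely log them for follow-up by program staff.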

"The momentum is there," Kelly reports, adding that local grantees worked hard to make the data-merge happen quickly. "The learning community strove to set higher expectations and challenge each other in continuous improvement."

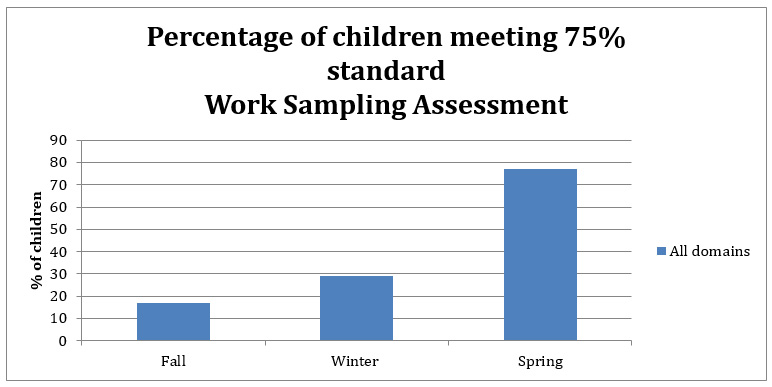

The School Readiness Goals Project has kept a close eye on one standard for the Minnesota version of the P4 from the Work Sampling System: "percent proficient at 75%." This is based on an HCRC finding that children achieving 75% of the total points on that instrument at the beginning of kindergarten were able to pass Minnesota's achievement tests in math and literacy when they reached third grade. The following chart shows HCRC's determination of the share of 4-year-olds in 2010-11 who met an overall proficiency rate of 75% based on the total number of points for each assessment.

Child Assessment Results as an Indicator of School Readiness
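The "percent proficient at 75%" standard reduces to a simple threshold count: the share of children whose total score reaches 75% of an instrument's maximum points. A minimal Python sketch, assuming each child has a single total score on an instrument and that the instrument's maximum total is known; the function name and the sample scores are hypothetical and do not reflect HCRC's data or procedure.

```python
def percent_proficient(total_scores, max_points, threshold=0.75):
    """Return the share of children whose total score is at least
    `threshold` (default 75%) of the instrument's maximum total points."""
    if not total_scores:
        raise ValueError("need at least one child's score")
    cutoff = threshold * max_points
    proficient = sum(1 for score in total_scores if score >= cutoff)
    return proficient / len(total_scores)


# Illustrative numbers only: four children on a hypothetical 100-point instrument.
print(percent_proficient([80, 74, 90, 60], max_points=100))  # 0.5
```

Because each instrument has its own point total, the cutoff is computed per instrument, which is why a proficiency level still has to be identified for tools other than the WSS Minnesota P4.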

Another recommendation from HCRC is to follow the children forward into their K-12 experiences and, at a minimum, to identify the level of proficiency needed on instruments other than the WSS Minnesota P4.

Next steps

The MHSA has managed to build its learning community around data for less than $50,000, cobbled together from its initial foundation grant, a contract with the state Department of Education, and contributions from participating programs and the individuals who work for them. The Head Start grantees also provided a great deal of technical assistance to the project in securing data-sharing agreements and finalizing data sets in the spring of 2011. "This is the bare bones of what it takes to create a state database," Kelly says.

Nonetheless, the MHSA is moving ahead with plans to expand the child database to include more explanatory variables around family engagement and teacher qualifications, to examine child progress for children with different developmental trajectories at enrollment, and to follow them into K-12 data systems.

The Minnesota Department of Education is also supporting a student information system technical assistance work group to help grantees manage their child and family administrative data in a more coordinated approach with local grantee leadership. The resources may be bare bones, but they continue to support work that informs program design, quality assessment, and successful instructional practices for Minnesota children.

(This profile is based on interviews conducted in April 2012.)

Last Updated: May 21, 2024